Diffusion Models for Adversarial Purification

Purify adversarial samples with diffusion models. Published at ICML 2022.

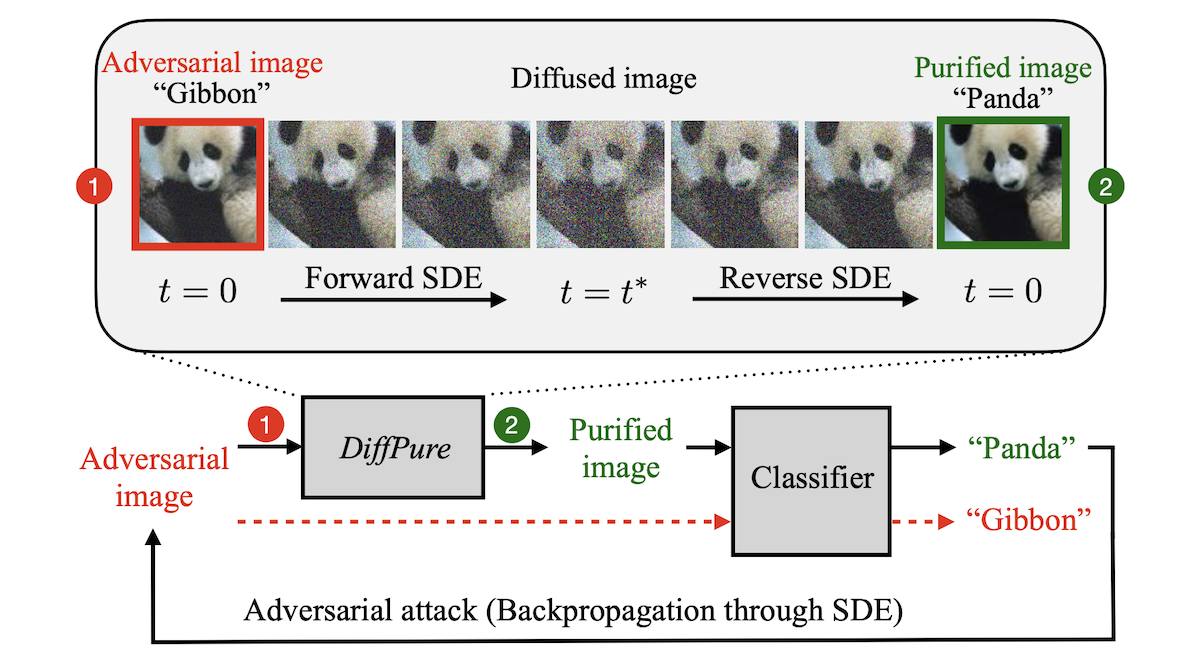

Adversarial purification is a kind of defense method that uses a generative model to remove adversarial perturbations from an input image before the image is passed to the classifier.

Diffusion models, which have become a hot research topic, are a new kind of generative model. They generate images by reversing a gradual diffusion (noising) process, turning random noise into an image step by step. One can read this blog for details about diffusion models.
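For reference, in the widely used discrete-time DDPM formulation (an assumption here; the paper itself works with a continuous-time version), a noisy image at step $t$ can be sampled directly from a clean image $x_0$ in closed form:

$$
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon, \qquad \epsilon \sim \mathcal{N}(0, I), \qquad \bar\alpha_t = \prod_{s=1}^{t} (1 - \beta_s),
$$

where $\beta_1, \dots, \beta_T$ is a fixed, increasing noise schedule. Small $t$ yields a lightly noised image; as $t$ approaches $T$, $\bar\alpha_t$ gets close to 0 and $x_t$ is essentially pure Gaussian noise. Generation runs this process in reverse, with a trained network removing a little noise at each step.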

An important characteristic of diffusion models is that they model “transition states” between an image and a corresponding sample from a Gaussian distribution, i.e., images corrupted by different amounts of Gaussian noise. A diffusion model can turn a noisy image into a clean, high-quality image step by step. This characteristic inspires their application to adversarial purification:

Given a pre-trained diffusion model, add noise to adversarial images following the forward diffusion process with a small diffusion timestep $t^*$ to get diffused images, from which we can recover clean images through the reverse denoising process before classification.
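Below is a minimal NumPy sketch of this diffuse-then-denoise preprocessing, written under several assumptions. It uses the discrete-time DDPM formulation with a linear noise schedule rather than the continuous-time (SDE) formulation the paper builds on, so the forward step samples $x_{t^*} = \sqrt{\bar\alpha_{t^*}}\,x_{\text{adv}} + \sqrt{1-\bar\alpha_{t^*}}\,\epsilon$ and the reverse step is DDPM ancestral sampling. Since no pre-trained model is available here, the trained noise-prediction network is replaced by a hypothetical `make_gaussian_oracle`, which is exact only for toy data drawn from $\mathcal{N}(\mu, \sigma_0^2 I)$. All function names, the perturbation, and the choice `t_star=100` are illustrative, and the final classification step is omitted.

```python
import numpy as np


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    # Assumed DDPM-style linear noise schedule beta_1..beta_T (stored 0-indexed).
    return np.linspace(beta_start, beta_end, T)


def diffuse(x0, t_star, alpha_bars, rng):
    # Forward diffusion in closed form: x_{t*} = sqrt(abar) * x0 + sqrt(1 - abar) * eps.
    ab = alpha_bars[t_star]
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * eps


def reverse_denoise(x_t, t_star, betas, alpha_bars, eps_model, rng):
    # DDPM ancestral sampling from index t* back to index 0 (the denoised image).
    x = x_t
    for t in range(t_star, -1, -1):
        beta_t = betas[t]
        eps_hat = eps_model(x, t)
        mean = (x - beta_t / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(1.0 - beta_t)
        if t > 0:
            # Inject fresh Gaussian noise on every step except the last one.
            x = mean + np.sqrt(beta_t) * rng.standard_normal(x.shape)
        else:
            x = mean
    return x


def make_gaussian_oracle(mu, sigma0, alpha_bars):
    # HYPOTHETICAL stand-in for a trained noise-prediction network: it is the exact
    # optimal predictor E[eps | x_t] only when clean data follow N(mu, sigma0^2 I).
    def eps_model(x, t):
        ab = alpha_bars[t]
        var_t = ab * sigma0**2 + (1.0 - ab)  # variance of the noised marginal at step t
        return np.sqrt(1.0 - ab) * (x - np.sqrt(ab) * mu) / var_t
    return eps_model


def purify(x_adv, t_star, betas, eps_model, rng):
    # Diffuse the (possibly adversarial) input to a small timestep t*, then denoise it.
    alpha_bars = np.cumprod(1.0 - betas)
    x_t = diffuse(x_adv, t_star, alpha_bars, rng)
    return reverse_denoise(x_t, t_star, betas, alpha_bars, eps_model, rng)


# Toy usage: 1000-dimensional "images" drawn from N(0, 0.05^2 I).
rng = np.random.default_rng(0)
betas = linear_beta_schedule()
alpha_bars = np.cumprod(1.0 - betas)
mu, sigma0 = np.zeros(1000), 0.05
x_clean = mu + sigma0 * rng.standard_normal(1000)
x_adv = x_clean + 0.5 * np.sign(rng.standard_normal(1000))  # hypothetical perturbation

eps_model = make_gaussian_oracle(mu, sigma0, alpha_bars)
x_pur = purify(x_adv, t_star=100, betas=betas, eps_model=eps_model, rng=rng)
print("distance before purification:", np.linalg.norm(x_adv - x_clean))
print("distance after purification: ", np.linalg.norm(x_pur - x_clean))
```

In this toy setting the purified sample should land much closer to `x_clean` than `x_adv` did, but it is a fresh sample near the data distribution, not an exact copy of the clean input. That is one reason the added noise must stay small: a larger $t^*$ removes more of the perturbation but also more of the image's own content.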

The noise-adding step is important. Intuitively, it pulls the input image into the distribution of the noisy transition images seen during training, so that reverse denoising can map it back into the space of training images. Note that this requires the training data distribution of the diffusion model to be similar to that of the adversarial data.
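One way to make this intuition concrete for a single image pair (an illustration, not a result quoted from the paper): if $x$ is a clean image and $x + \delta$ its adversarial version, diffusing both to step $t$ with the DDPM closed form $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$ gives two Gaussians with the same covariance $(1-\bar\alpha_t)I$, whose KL divergence is $\bar\alpha_t \lVert\delta\rVert^2 / (2(1-\bar\alpha_t))$. This decreases monotonically as $t$ grows, so the diffused adversarial image becomes statistically harder to tell apart from the diffused clean one. The sketch below evaluates it under an assumed linear noise schedule for a hypothetical $\ell_\infty$ perturbation of 8/255 on every pixel of a CIFAR-10-sized image rescaled to $[-1, 1]$.

```python
import numpy as np


def diffused_kl(delta_sq_norm, alpha_bar):
    # KL between N(sqrt(ab) x, (1 - ab) I) and N(sqrt(ab) (x + delta), (1 - ab) I):
    # equal covariances, so KL = ab * ||delta||^2 / (2 * (1 - ab)).
    return alpha_bar * delta_sq_norm / (2.0 * (1.0 - alpha_bar))


betas = np.linspace(1e-4, 0.02, 1000)  # assumed linear DDPM schedule
alpha_bars = np.cumprod(1.0 - betas)

# Hypothetical worst case: every pixel of a 3x32x32 image shifted by 8/255 in [0, 1]
# units, i.e. by 16/255 after rescaling the image to [-1, 1].
num_pixels = 3 * 32 * 32
delta_sq_norm = num_pixels * (2 * 8 / 255) ** 2

steps = [0, 10, 50, 100, 300]
kls = [diffused_kl(delta_sq_norm, alpha_bars[t]) for t in steps]
for t, kl in zip(steps, kls):
    print(f"step {t:3d}: KL = {kl:10.2f}")
```

At a small timestep the divergence shrinks sharply but does not reach zero, so the noise alone does not erase the perturbation; the reverse denoising process, which maps the diffused input back toward the data distribution, is what produces the purified image.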

Interestingly, under AutoAttack on CIFAR-10 with WideResNet-70-16, this method shows a decrease in standard (clean) accuracy. This may be due to the discrepancy between the reverse-denoised images and real images. See the paper [1] for more details on the experimental results.